TL;DR: For cross-platform training (e.g., developing on Windows and training on Ubuntu), there doesn't seem to be much support at the moment (Feb 2021), though things are expected to improve soon with upcoming Windows updates. (If you know of better options, please let me know.)

Because of remote work and my being away from college, my thesis advisor offered to run my Deep Learning code (for model training) on his machine. The problem was that I used a Windows machine and he used an Ubuntu machine. So I had to make sure the code would work out of the box on his machine, to avoid the headache of back-and-forth communication for debugging.

I tried a few approaches, and I discuss my experience with each of them here.

- Perform an Ubuntu + Windows Dual Boot

- Use Windows Subsystem for Linux (WSL)

- Use a Virtual Machine (e.g., VirtualBox)

- Simply export the host environment to a yml file and recreate it on the target machine

- Use Docker

Using a Dual Boot

The most straightforward (albeit involved) approach is dual booting. But it is painful: I would need to partition the hard disk and install another OS, potentially slowing down my already slow computer. Thinking we could do better, I explored other options.

Using Windows Subsystem for Linux

Microsoft first announced WSL in 2016 as a way to run a Linux development environment inside Windows. It allows developers to run a GNU/Linux environment, including most command-line tools, utilities, and applications, without the overhead of a traditional virtual machine or dual-boot setup.


The best way to learn more is, of course, the official documentation. In 2020, Microsoft released WSL2, which runs a real Linux kernel inside a lightweight Hyper-V-based virtual machine and brings more capabilities and improvements.

After some tinkering, I found that at the moment (Feb 2021), WSL2 does not yet support GPU access in its stable releases (although GPU access via WSL2 is available if you sign up for the Windows Insider Program).

Using Virtual Machines

For the most part, Virtual Machines (VMs) have been the one-stop solution for running one OS inside another. Although they provide complete isolation from the host OS, they have significant drawbacks: a) they are slow and inefficient (compared to a native OS), b) they have large file sizes, and c) they are resource-intensive.

I downloaded a copy of VMware Workstation Player and, after a lot of tinkering, it again turned out that at the moment neither Oracle VirtualBox nor VMware Workstation Player (free personal edition) supports GPU access from the guest OS, since, as Type-2 hypervisors, they don't offer GPU passthrough.

Of course, a Type-1 hypervisor-based product like vSphere could possibly work, but its free version again doesn't offer GPU capabilities.

NOTE:

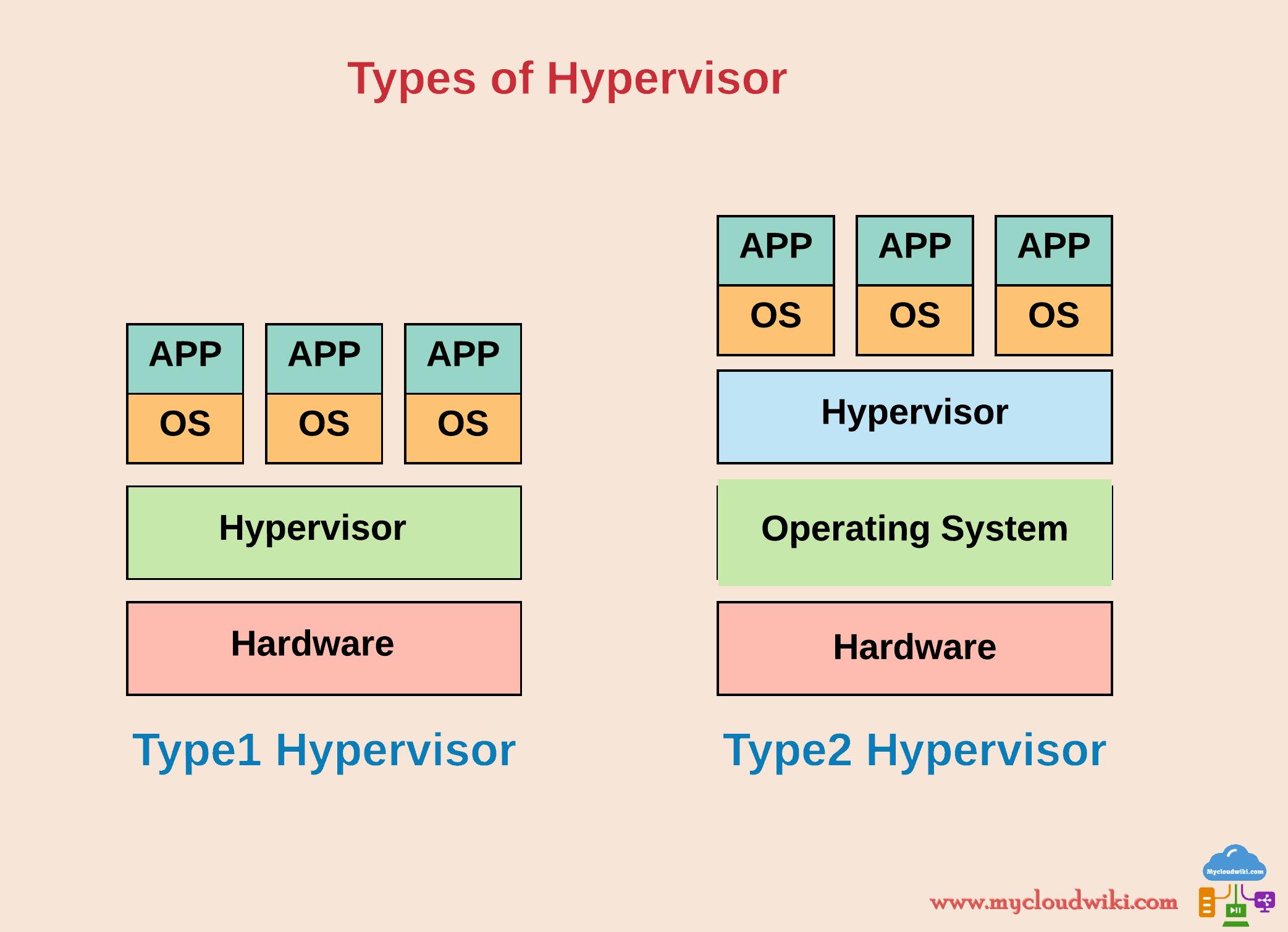

- A hypervisor is an intermediate software layer on which the guest OS runs; it separates the host machine/OS from the guest OS.

- A Type-1 Hypervisor (aka Bare-Metal Hypervisor) runs directly on the host machine's physical hardware and doesn't need to run on top of an underlying OS. This increases security via isolation of all Guest OSes.

- A Type-2 Hypervisor is installed on top of a host OS and relies on the host OS to communicate with CPU, GPU, network and storage resources. They are generally less secure (but also less expensive) and are typically used for non-production workloads.

Using a yml file to replicate the conda environment

Of course, this looks like the simplest of all the options. After all, we only need two commands: `conda env export > env.yml` on the host machine and `conda env create -n myenv -f env.yml` on the target machine.

But it turned out that, given the tangled web of package dependencies (and the fact that a full export pins exact build strings, many of which are specific to Windows), there were a lot of version conflict errors while trying to replicate the environment. So I had to export with `--from-history` and use the flexible `channel_priority` setting. As a result, it was not an exact version-to-version replication. With luck, the code might run without errors, but future package upgrades (intentional or not) might break something.
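To make that trade-off concrete, here is a small hypothetical helper that counts how tightly a list of conda dependency specs is pinned. A full `conda env export` writes entries of the form `name=version=build`, while `--from-history` keeps only what was explicitly requested, leaving the solver on the target machine free to pick other versions and builds. The dependency lists below are made-up examples, not my actual environment, and the sketch assumes simple specs (no `pip:` sub-section, no `>=`/`<` ranges).

```python
from collections import Counter


def pin_level(spec: str) -> str:
    """Classify a simple conda spec by how much of it is pinned."""
    # Treat "==" like "=" so "numpy==1.19.2" counts as a version pin.
    parts = spec.replace("==", "=").split("=")
    if len(parts) >= 3 and parts[2]:
        return "version+build"  # exact build string, often platform-specific
    if len(parts) >= 2 and parts[1]:
        return "version"        # same version, but the solver picks the build
    return "unpinned"           # the solver picks both version and build


def summarize(specs):
    """Count how many specs fall into each pin level."""
    return Counter(pin_level(s) for s in specs)


# Hypothetical examples of the two export styles.
full_export = ["python=3.8.5=h1234_0", "numpy=1.19.2=py38_0", "pytorch=1.7.1=py3.8_cuda11.0_0"]
from_history = ["python=3.8", "numpy", "pytorch"]

if __name__ == "__main__":
    print("full export: ", dict(summarize(full_export)))
    print("from history:", dict(summarize(from_history)))
```

The output shows why `--from-history` travels across platforms more easily: nothing in it refers to a Windows-specific build, but for the same reason two machines can end up with different package versions.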

Also, I still needed to figure out a way to run my code on an Ubuntu machine (with an NVIDIA GPU) for a few iterations to make sure nothing breaks. So, while this was a quick fix, I wanted to explore the last option to see if we could do better.

Using Docker

For the uninitiated, Docker is the quintessential solution to the typical "It worked in dev but not in QA" problem.

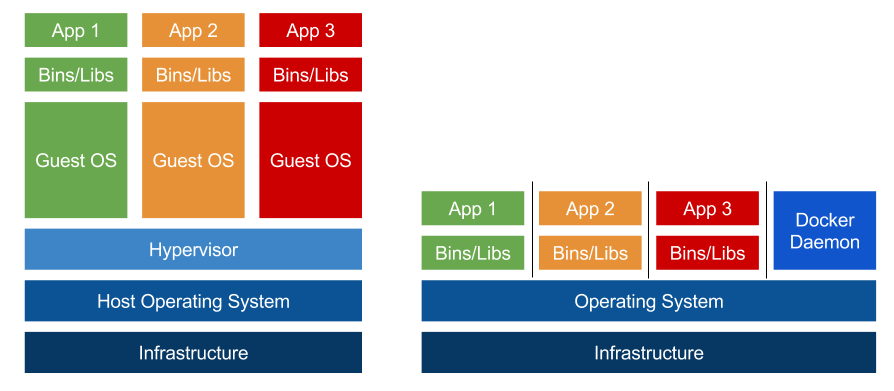

A container is a standard unit of software that packages up code and all its dependencies so the application runs quickly and reliably from one computing environment to another. A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings. Container images become containers at runtime and in the case of Docker containers - images become containers when they run on Docker Engine.

A Docker image is made up of layers (file system contents, binaries, etc.) packed together into a single package. The base image contains the operating system of the container, which can be different from the OS of the host. However, this is not the full operating system that runs on the host: the base image contains only the OS file system and binaries, while a full OS also includes the kernel. The container uses the host's kernel instead. On top of the base image are multiple layers, each building a portion of the container.
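As a rough mental model (a simplification, not how Docker's storage drivers are actually implemented), the layering can be sketched with Python's `collections.ChainMap`: each layer maps file paths to contents, lookups search from the top layer down, and starting a container adds an empty writable layer on top. The layers and file names are hypothetical, and file deletions (which Docker records with special "whiteout" entries) are not modelled.

```python
from collections import ChainMap

# Hypothetical layers: each maps a file path to (a stand-in for) its contents.
base_os_layer = {
    "/etc/os-release": 'NAME="Ubuntu"',  # OS file system and binaries...
    "/bin/bash": "<bash binary>",        # ...but no kernel anywhere
}
deps_layer = {
    "/usr/bin/python3": "<python binary>",
    "/app/config.yml": "epochs: 10",
}
app_layer = {
    "/app/train.py": "print('training')",
    "/app/config.yml": "epochs: 50",     # shadows the file from deps_layer
}


def build_image(*layers):
    """Stack read-only layers given bottom (base) to top."""
    # ChainMap looks keys up left to right, so the top layer must come first.
    return ChainMap(*reversed(layers))


image = build_image(base_os_layer, deps_layer, app_layer)

# Starting a container adds an empty writable layer in front of the image.
container = image.new_child()
container["/app/checkpoint.pt"] = "<model weights>"  # written to the new layer only

if __name__ == "__main__":
    print(container["/app/config.yml"])   # the top-most layer wins
    print("/app/checkpoint.pt" in image)  # the image layers are untouched
```

The same picture explains why images are cheap to share: several containers can sit on top of the same read-only layers, each with its own thin writable layer.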

While VMs take minutes to spin up and are larger, a container spins up in a few seconds and needs less space. The trade-off is the lack of kernel-level isolation in containers (i.e., although containers are isolated from each other, a kernel-level vulnerability in the host OS can result in security exploits). Another consequence is that, unlike VMs, containers share the host's kernel, so a container can only run on a host with a compatible kernel. For example, a container with an Ubuntu base image needs a Linux kernel; Docker Desktop on Windows can run it only because it runs a Linux VM behind the scenes.
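One way to see the shared kernel for yourself is a small script, a hypothetical helper assuming a Linux container with Python installed, that prints two things: the OS named in the image's `/etc/os-release` file and the kernel release reported by `platform.release()`. Inside, say, an Ubuntu-based container on a host running a different distribution, the first should report Ubuntu while the second should match `uname -r` on the host (on Docker Desktop, that is the kernel of Docker's own Linux VM).

```python
import platform
from pathlib import Path


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines from an os-release file, stripping quotes."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip().strip('"').strip("'")
    return info


def describe_environment() -> dict:
    """Report the userland OS (from the image) and the running kernel (from the host)."""
    os_release = Path("/etc/os-release")
    userland = parse_os_release(os_release.read_text()) if os_release.exists() else {}
    return {
        "userland": userland.get("PRETTY_NAME", "unknown"),
        "kernel": f"{platform.system()} {platform.release()}",
    }


if __name__ == "__main__":
    print(describe_environment())
```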

To learn more about Docker, check out Docker, Kubernetes, Microservices, Containers for Beginners, FreeCodeCamp's Docker Course and the Docker Documentation (tutorials, syntax and deeper explanations).

It turns out that NVIDIA Docker (the tooling that enables GPU access from inside containers) doesn't support Windows machines (see NVIDIA DOCKER - No Windows Support and NVIDIA-DOCKER FAQs).

The recurring problem with WSL2, VMs and Docker turns out to be the lack of support for GPU access through the hypervisor. Although the Windows Insider Program offers a way out, it seems that people who want a stable release will have to wait a few more months (maybe a year or two at most?).

So, what finally happened?

I ended up dual booting Ubuntu, since there was no other way to get an Ubuntu environment with GPU access on a Windows machine. Even though "Windows-Dev + Ubuntu-Deploy" was not possible, an "Ubuntu-Dev + Ubuntu-Deploy" workflow would still be much simpler with Docker containers.

After building the image and a few test-run iterations, the container could finally be run on the target machine.
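During those test runs, it helps to check early whether the GPU is visible inside the container at all. Below is a minimal standard-library sketch, assuming the image has Python and that `nvidia-smi` is available inside the container (the NVIDIA container runtime normally provides it when the container is started with GPU access). It calls `nvidia-smi -L`, which lists one GPU per line, and parses the names. If the training code uses PyTorch, `torch.cuda.is_available()` is a more direct check.

```python
import shutil
import subprocess


def parse_gpu_list(output: str) -> list:
    """Extract GPU names from `nvidia-smi -L` lines like 'GPU 0: <name> (UUID: ...)'."""
    gpus = []
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("GPU "):
            continue  # skip blank lines and sub-device (e.g. MIG) entries
        name = line.split(":", 1)[1]        # drop the "GPU 0" prefix
        name = name.split("(UUID:", 1)[0]   # drop the UUID suffix
        gpus.append(name.strip())
    return gpus


def visible_gpus() -> list:
    """Return the GPUs nvidia-smi can see, or [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    gpus = visible_gpus()
    print("Visible GPUs:", gpus if gpus else "none (check the drivers and the --gpus flag)")
```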

Using a Docker container saved us from painful CUDA and cuDNN installations, as well as several other package version conflicts. The only steps required on the target machine were: a) installing the NVIDIA drivers, b) installing Docker (along with the NVIDIA Container Toolkit for GPU access), and c) running the Docker container.
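Step c) boils down to a single `docker run` command. The sketch below builds it in Python so each flag is documented in one place; the image name, dataset path and `train.py` entry point are hypothetical placeholders (the script is assumed to sit in the image's working directory), and `--gpus` only works with Docker 19.03 or newer plus the NVIDIA Container Toolkit. By default it only prints the command, as a dry run.

```python
import shlex
import subprocess


def docker_run_command(image, data_dir, script="train.py", gpus="all"):
    """Build a `docker run` argv list for a GPU training job (hypothetical defaults)."""
    return [
        "docker", "run",
        "--rm",                     # remove the container when training exits
        "--gpus", gpus,             # expose host GPUs (needs the NVIDIA Container Toolkit)
        "-v", f"{data_dir}:/data",  # mount the dataset from the host at /data
        image,
        "python", script,           # command executed inside the container
    ]


def run_training(cmd, dry_run=True):
    """Print the command; only execute it when dry_run is False."""
    print(shlex.join(cmd))
    if not dry_run:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical image name and dataset path on the target machine.
    cmd = docker_run_command("my-thesis-model:latest", "/home/advisor/dataset")
    run_training(cmd)  # pass dry_run=False on the target machine to start training
```

Keeping the command in one function means the advisor only needs to change the dataset path, rather than remembering the GPU and volume flags.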

In hindsight, I realised that instead of a dual-boot installation, a simpler way would have been to: a) rent a small AWS EC2 instance for developing the code on Ubuntu, and b) rent a cheap GPU instance for an hour to test-run the Docker container and fine-tune the code.

NOTE: In an upcoming post, I am planning to expand on how I utilised Docker for training Deep Learning Models and I will update the link here once it's out.

Conclusion

Since I ended up with a dual boot anyway, was the entire exercise futile? Well, technically yes. But I am grateful, since the wild goose chase helped me learn a lot of tools like Ubuntu (and using the terminal instead of a GUI, which is so cool btw), Docker, WSL and many more. I also gained a newfound respect and admiration for software developers and machine learning scientists who didn't have these options just a few years ago and yet endured.